Custom Alerts in ROSA 4.11.x

This content is authored by Red Hat experts, but has not yet been tested on every supported configuration.

Starting with OpenShift 4.11, it is possible to manage alerting rules for user-defined projects. Similarly, in ROSA clusters the OpenShift administrator can enable a second AlertManager instance in the user workload monitoring namespace, which can be used to route such alerts.

Note: Currently this is not a managed feature of ROSA. Such an implementation may get overwritten if the User Workload Monitoring functionality is toggled off and on using the OpenShift Cluster Manager (OCM).

Prerequisites

- AWS CLI

- A Red Hat OpenShift Service on AWS (ROSA) cluster 4.11.0 or higher
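The commands later in this guide call `oc`, `jq`, and `kubectl` in addition to the AWS CLI listed above. A quick check along the following lines can confirm they are on your `PATH` before you start. This is only a minimal sketch: the function name `missing_tools` is hypothetical, and the tool list is inferred from the commands used in this guide rather than from an official requirements list.

```shell
#!/usr/bin/env bash
# Print the name of each given command that is not found on PATH.
# Returns non-zero if at least one of them is missing.
missing_tools() {
  local missing=0 tool
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "$tool"
      missing=1
    fi
  done
  return "$missing"
}

# Example (tool list is an assumption based on this guide):
#   missing_tools aws oc jq kubectl
```

If the function prints nothing and returns zero, every listed tool was found.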

Create Environment Variables

Configure User Workload Monitoring to include AlertManager

Edit the user workload config to include AlertManager

Note: If you have other modifications to this config, you will need to edit the resource by hand rather than overwrite it as shown below.

    cat << EOF | oc apply -f -
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: user-workload-monitoring-config
      namespace: openshift-user-workload-monitoring
    data:
      config.yaml: |
        alertmanager:
          enabled: true
          enableAlertmanagerConfig: true
    EOF

Verify that a new Alert Manager instance is defined

    oc -n openshift-user-workload-monitoring get alertmanager

    NAME            VERSION   REPLICAS   AGE
    user-workload   0.24.0    2          2m

If you want non-admin users to be able to define alerts in their own namespaces, you can run the following.

    oc -n <namespace> adm policy add-role-to-user alert-routing-edit <user>

Create a Slack webhook integration in your Slack workspace. Create environment variables for it.

    SLACK_API_URL=https://hooks.slack.com/services/XXX/XXX/XXX
    SLACK_CHANNEL='#paultest'

Update the Alert Manager Configuration file

This will create a basic AlertManager configuration to send alerts to your Slack channel. If you have an existing configuration, you’ll need to edit it rather than overwrite it as shown here.

    cat << EOF | oc apply -f -
    apiVersion: v1
    kind: Secret
    metadata:
      name: alertmanager-user-workload
      namespace: openshift-user-workload-monitoring
    stringData:
      alertmanager.yaml: |
        global:
          slack_api_url: "${SLACK_API_URL}"
        route:
          receiver: Default
          group_by: [alertname]
        receivers:
          - name: Default
            slack_configs:
              # Quoted so that a leading '#' in the channel name is not read as a YAML comment
              - channel: "${SLACK_CHANNEL}"
                send_resolved: true
    EOF
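Because the Secret above is built by substituting `SLACK_API_URL` and `SLACK_CHANNEL` into the configuration, an empty or mistyped variable produces a configuration that applies cleanly but never delivers anything. A pre-flight check like the one below could be run before the `oc apply`. It is a sketch under stated assumptions: `check_slack_env` is a hypothetical helper, and it assumes a channel name that begins with `#` as in this guide, even though AlertManager itself accepts other targets.

```shell
#!/usr/bin/env bash
# Check that the Slack variables look plausible before they are
# substituted into the AlertManager configuration.
# Assumption: the channel is written as '#channel-name', as in this guide.
check_slack_env() {
  case "${SLACK_API_URL:-}" in
    https://hooks.slack.com/services/?*) ;;
    *) echo "SLACK_API_URL is unset or not a Slack webhook URL" >&2
       return 1 ;;
  esac
  case "${SLACK_CHANNEL:-}" in
    '#'?*) ;;
    *) echo "SLACK_CHANNEL should look like '#channel-name'" >&2
       return 1 ;;
  esac
}

# Example: check_slack_env && echo "Slack variables look fine"
```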

Create an Example Alert

Create a Namespace for your custom alert

    oc create namespace custom-alert

Verify it works by creating a Prometheus Rule that will fire off an alert

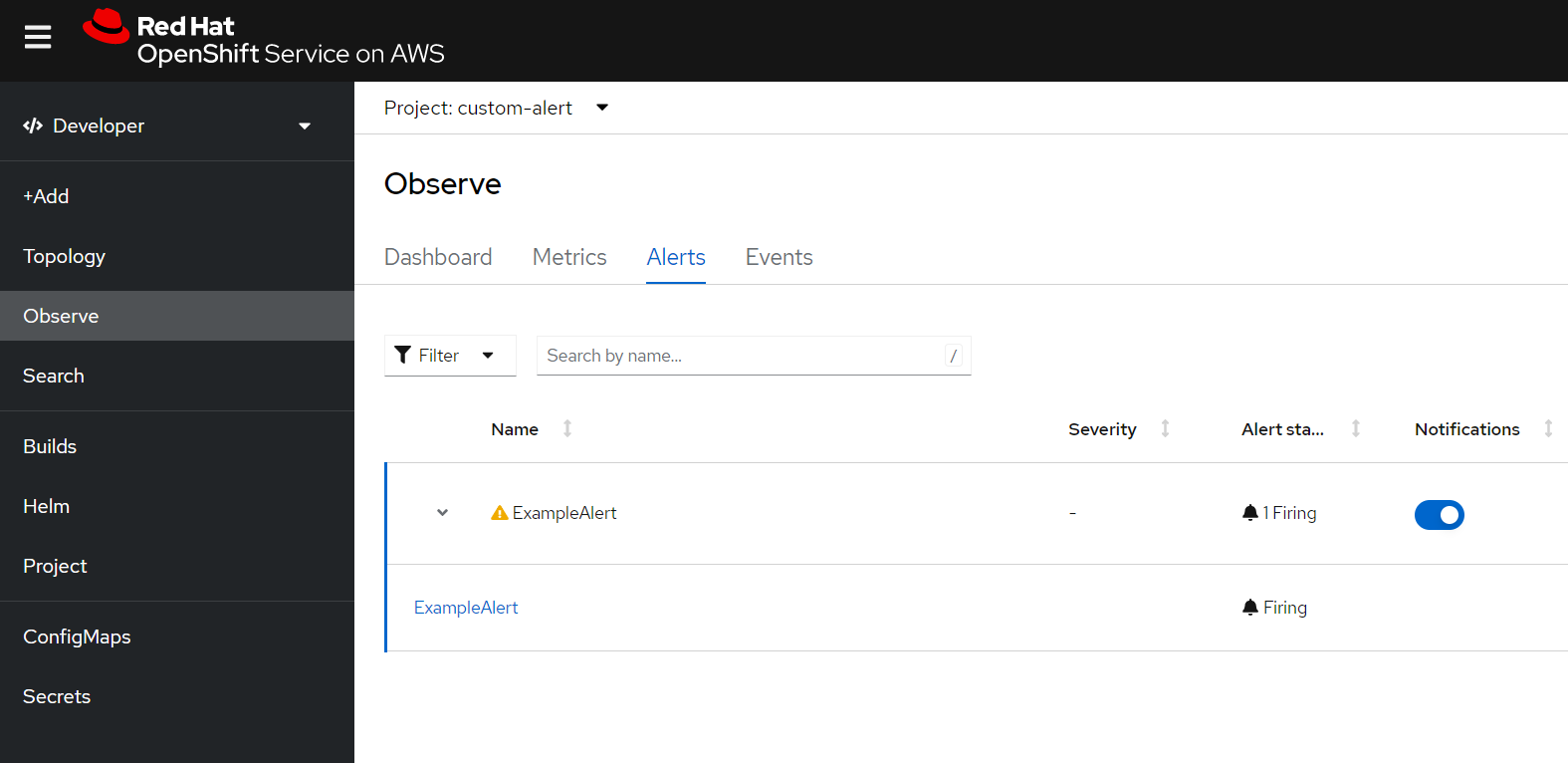

    cat << EOF | oc apply -f -
    apiVersion: monitoring.coreos.com/v1
    kind: PrometheusRule
    metadata:
      name: prometheus-example-rules
      namespace: custom-alert
    spec:
      groups:
      - name: example.rules
        rules:
        - alert: ExampleAlert
          # vector(1) always returns a sample, so this alert fires continuously
          expr: vector(1)
    EOF

Log into your OpenShift Console and switch to the Developer view. Select the Observe item from the menu on the left and then click Alerts. Make sure your Project is set to custom-alert.

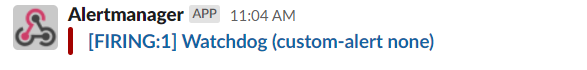

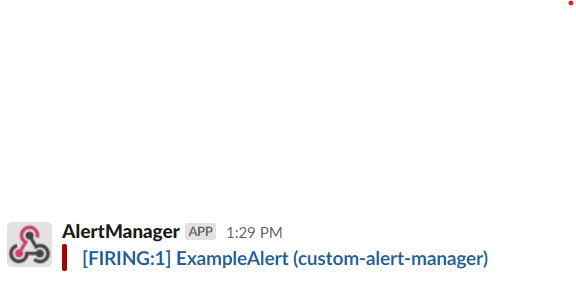

Check the Alert was sent to Slack
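If nothing arrives in Slack, it can help to rule out the webhook itself by posting to it directly, bypassing AlertManager. Slack incoming webhooks accept a JSON body with a `text` field. The sketch below assumes `SLACK_API_URL` is still set from the earlier step and that `curl` is installed; the function names `slack_payload` and `send_slack_test` are hypothetical.

```shell
#!/usr/bin/env bash
# Build a minimal Slack incoming-webhook payload: {"text":"..."}.
# Backslashes and double quotes are escaped; other control characters
# are not handled, so keep the message to a single plain line.
slack_payload() {
  local text=$1
  text=${text//\\/\\\\}
  text=${text//\"/\\\"}
  printf '{"text":"%s"}\n' "$text"
}

# Post a test message to the webhook stored in SLACK_API_URL.
send_slack_test() {
  curl --silent --show-error --fail \
    -X POST -H 'Content-type: application/json' \
    --data "$(slack_payload "$1")" \
    "${SLACK_API_URL:?SLACK_API_URL is not set}"
}

# Example: send_slack_test "Test message from the custom alerts guide"
```

If the test message shows up in the channel but the alert does not, the problem is more likely in the AlertManager configuration than in the Slack integration.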

What about Cluster Alerts?

By default, cluster alerts are only sent to Red Hat SRE, and you cannot modify the cluster alert receivers. However, you can copy any alerts you wish to see into your own alerting namespace, where they will be picked up by the user workload AlertManager.

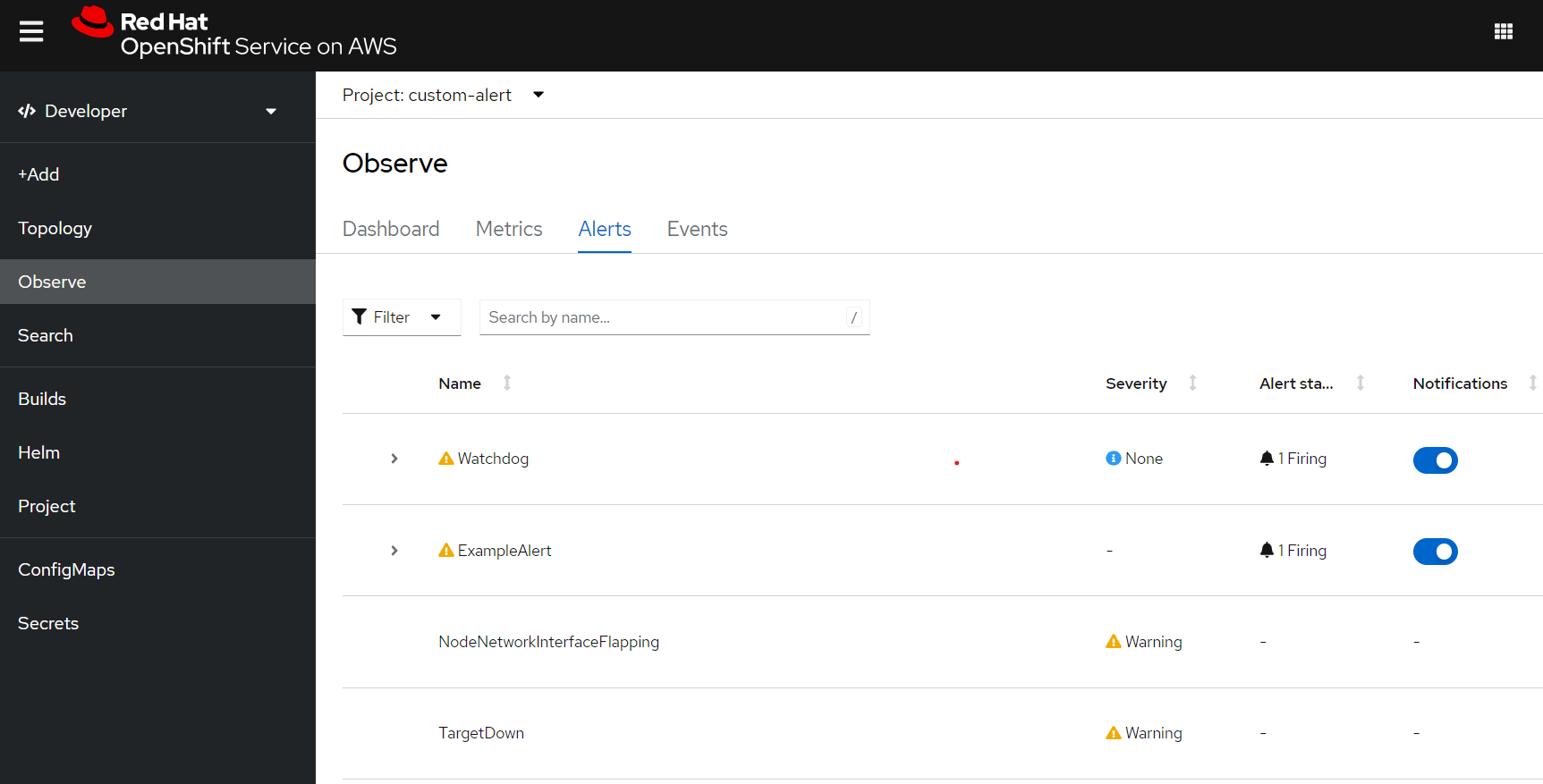

Duplicate a subset of the Cluster Monitoring Prometheus Rules

    oc -n openshift-monitoring get prometheusrule \
      cluster-monitoring-operator-prometheus-rules -o json | \
      jq '.metadata.namespace = "custom-alert"' | \
      kubectl create -f -

Check the Alerts in the custom-alert Project in the OpenShift Console, and you’ll now see the Watchdog and other cluster alerts.

You should also have received a notification for the Watchdog alert in your Slack channel.
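The `jq` step above changes only the namespace, so every rule in the source object is copied. To duplicate just a few alerts, a stricter filter could be used instead. The following is a sketch, not a tested procedure: `filter_rules` is a hypothetical helper that keeps only the alerting rules whose names you list, and it also drops server-populated metadata so the copy is a clean new object.

```shell
#!/usr/bin/env bash
# Read a PrometheusRule as JSON on stdin and emit a trimmed copy that
#  - targets the namespace given as the first argument
#  - keeps only alerting rules whose name is in the JSON array given
#    as the second argument (recording rules are dropped)
#  - omits server-populated metadata such as uid and resourceVersion
filter_rules() {
  jq --arg ns "$1" --argjson keep "$2" '
    {
      apiVersion, kind,
      metadata: {name: .metadata.name, namespace: $ns},
      spec: {
        groups: [
          .spec.groups[]
          | .rules |= map(select(.alert as $a | any($keep[]; . == $a)))
          | select(.rules | length > 0)
        ]
      }
    }'
}

# Example, in place of the jq step in the command above:
#   oc -n openshift-monitoring get prometheusrule \
#     cluster-monitoring-operator-prometheus-rules -o json | \
#     filter_rules custom-alert '["Watchdog"]' | \
#     kubectl create -f -
```

Groups left with no matching rules are removed entirely, which keeps the copied object small and easier to review.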